This article explains how to train an object detection model on custom data and test the trained model in Google Colab. TensorFlow-GPU lets your PC use its graphics card for extra processing power during training, so it is used in this tutorial. In my experience, TensorFlow-GPU reduces training time by a factor of about 8 compared with regular TensorFlow (3 hours to train instead of 24 hours). The CPU-only version of TensorFlow also works for this tutorial, but training takes longer. I am using TensorFlow v1.5 and this commit of the TensorFlow Object Detection API: https://github.com/tensorflow/models/tree/079d67d9a0b3407e8d074a200780f3835413ef99. If parts of this tutorial do not work, you may need to install TensorFlow v1.5 and use this exact commit rather than the most up-to-date version.

Downloads

Let’s download the software and code required for this tutorial.

1. Anaconda

To train a model and run inference with it, we need an environment. We will create a virtual environment with Anaconda. Download and install Anaconda from https://www.anaconda.com/products/individual

2. Models (code repository)

Download Google's code repository for training the Faster R-CNN model (or any other object detection model supported by TensorFlow).

Download the full TensorFlow models repository (which contains the object detection code) from https://github.com/tensorflow/models by clicking the “Clone or Download” button and downloading the zip file. Open the downloaded zip file and extract the “models-master” folder directly into your project directory (for example, C:\my_projects\exp\tensorflow1_cards). Rename “models-master” to just “models”.

Note: The TensorFlow models repository (which contains the Object Detection API) is continuously updated by its developers, and some changes break compatibility with older versions of TensorFlow. Ideally, use the latest version of TensorFlow with the latest models repository. If you are not using the latest version, clone or download the commit that matches the TensorFlow version you are using.
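If you need a specific commit rather than the latest code, GitHub serves a zip archive for any commit SHA at `https://github.com/<owner>/<repo>/archive/<ref>.zip`. The sketch below builds that URL for the commit mentioned in the introduction and optionally downloads and extracts it. The helper names `commit_archive_url` and `download_and_extract` are hypothetical and are not part of any API. The extracted folder is named `models-<sha>` instead of `models-master`, so rename it to `models` as described above.

```python
import urllib.request
import zipfile
from pathlib import Path

# Commit of tensorflow/models referenced in the introduction of this article.
PINNED_COMMIT = "079d67d9a0b3407e8d074a200780f3835413ef99"


def commit_archive_url(owner: str, repo: str, ref: str) -> str:
    """Build GitHub's zip-archive URL for a branch name, tag, or commit SHA."""
    return "https://github.com/{}/{}/archive/{}.zip".format(owner, repo, ref)


def download_and_extract(url: str, dest_dir: str) -> Path:
    """Download a zip archive into dest_dir and extract it there (needs network access)."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    archive_path = dest / "models.zip"
    urllib.request.urlretrieve(url, str(archive_path))
    with zipfile.ZipFile(str(archive_path)) as zf:
        zf.extractall(str(dest))
    return dest


if __name__ == "__main__":
    url = commit_archive_url("tensorflow", "models", PINNED_COMMIT)
    print(url)
    # download_and_extract(url, "C:/my_projects/exp/tensorflow1_cards")
    # Afterwards, rename the extracted models-<sha> folder to models.
```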

3. Trained Model

Download a pre-trained model from the TensorFlow model zoo, which contains models trained on several datasets: https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/tf1_detection_zoo.md

They provide a collection of detection models pre-trained on the COCO dataset, the Kitti dataset, the Open Images dataset, the AVA v2.1 dataset, the iNaturalist Species Detection Dataset, and the Snapshot Serengeti Dataset. These models can be used for out-of-the-box inference if the categories you need are already in those datasets. They are also useful for initializing your own models when training on new datasets.

TensorFlow provides several object detection models (pre-trained classifiers with specific neural network architectures) in its model zoo. Some models (such as the SSD-MobileNet model) have an architecture that allows for faster detection but with less accuracy, while some models (such as the Faster-RCNN model) give slower detection but with more accuracy.

The only issue with the Faster RCNN is that it is slow.
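Model-zoo entries are distributed as `.tar.gz` archives. The sketch below extracts one and reports the name of the folder it creates, which you will later reference from the pipeline config. `MODEL_URL` is an assumed example (the Faster R-CNN Inception v2 COCO archive), so check the zoo page for the exact link of the model you choose. `extract_model` is a hypothetical helper.

```python
import tarfile
from pathlib import Path

# Assumed example: TF1 model-zoo archive for Faster R-CNN Inception v2 (COCO).
# Check the model zoo page for the exact link of the model you pick.
MODEL_URL = ("http://download.tensorflow.org/models/object_detection/"
             "faster_rcnn_inception_v2_coco_2018_01_28.tar.gz")


def extract_model(archive_path: str, dest_dir: str) -> list:
    """Extract a model-zoo .tar.gz into dest_dir and return its top-level folder names."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as tar:
        members = tar.getmembers()
        top_level = sorted({Path(m.name).parts[0] for m in members if Path(m.name).parts})
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions can reject unsafe member paths while extracting.
            tar.extractall(str(dest), filter="data")
        else:
            tar.extractall(str(dest))
    return top_level
```

For example, after `urllib.request.urlretrieve(MODEL_URL, "model.tar.gz")`, you could call `extract_model("model.tar.gz", "models/research/object_detection")`. The destination folder is only a suggestion.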

4. Custom Code

Download the EdjeElectronics GitHub repository to get labeled data and the custom code that generates TFRecords for training and testing: https://github.com/EdjeElectronics/TensorFlow-Object-Detection-API-Tutorial-Train-Multiple-Objects-Windows-10

We will use this repository for the labeled images and for the code that generates the ground truth (TFRecords).

Env Setup (Local)

A. Create a virtual Environment with anaconda

# Change to the project folder (assuming everything required has already been downloaded)

cd C:\my_projects\exp\tensorflow1_cards

# Create a virtual environment

conda create -n cards_venv pip python=3.6

# Activate virtual environment

conda activate cards_venv

# Install TensorFlow GPU

# Installing tensorflow-gpu with conda (conda install tensorflow-gpu) also installs compatible

# versions of CUDA and cuDNN. pip does not, so with pip you must install CUDA and cuDNN yourself.

# You can also use the CPU-only version of TensorFlow, but it will run much slower.

# If you want to use the CPU-only version, use "tensorflow" instead of "tensorflow-gpu" in the command below.

# For even faster execution, build TensorFlow from source (see section B).

# Note: without a version pin, pip installs the newest release, but this tutorial targets TensorFlow 1.x.

pip install --ignore-installed --upgrade tensorflow-gpu

# Install the protobuf compiler

# The TensorFlow Object Detection API uses Protocol Buffers, a language-neutral, platform-neutral,

# extensible mechanism for serializing structured data. Think of it as XML, but smaller,

# faster, and simpler.

conda install -c anaconda protobuf

# other needed libraries for the API

pip install pillow

pip install lxml

pip install Cython

pip install contextlib2

pip install jupyter

pip install matplotlib

# Libraries required to prepare the data

pip install pandas

pip install opencv-python

# Configure PYTHONPATH

set PYTHONPATH=C:\my_projects\exp\tensorflow1_cards\models;C:\my_projects\exp\tensorflow1_cards\models\research;C:\my_projects\exp\tensorflow1_cards\models\research\slim

# Every time the "cards_venv" virtual

# environment is exited, the PYTHONPATH variable is reset and needs to be set up again.

# You can use "echo %PYTHONPATH%" to see if it has been set or not.

set PATH=%PATH%;%PYTHONPATH%

echo %PATH%

# Change to the research folder to compile the protobuf files

cd C:\my_projects\exp\tensorflow1_cards\models\research

# If the command below fails, use the loop command after it to compile the proto files instead

protoc --python_out=.\ .\object_detection\protos\anchor_generator.proto .\object_detection\protos\argmax_matcher.proto .\object_detection\protos\bipartite_matcher.proto .\object_detection\protos\box_coder.proto .\object_detection\protos\box_predictor.proto .\object_detection\protos\eval.proto .\object_detection\protos\faster_rcnn.proto .\object_detection\protos\faster_rcnn_box_coder.proto .\object_detection\protos\grid_anchor_generator.proto .\object_detection\protos\hyperparams.proto .\object_detection\protos\image_resizer.proto .\object_detection\protos\input_reader.proto .\object_detection\protos\losses.proto .\object_detection\protos\matcher.proto .\object_detection\protos\mean_stddev_box_coder.proto .\object_detection\protos\model.proto .\object_detection\protos\optimizer.proto .\object_detection\protos\pipeline.proto .\object_detection\protos\post_processing.proto .\object_detection\protos\preprocessor.proto .\object_detection\protos\region_similarity_calculator.proto .\object_detection\protos\square_box_coder.proto .\object_detection\protos\ssd.proto .\object_detection\protos\ssd_anchor_generator.proto .\object_detection\protos\string_int_label_map.proto .\object_detection\protos\train.proto .\object_detection\protos\keypoint_box_coder.proto .\object_detection\protos\multiscale_anchor_generator.proto .\object_detection\protos\graph_rewriter.proto .\object_detection\protos\calibration.proto .\object_detection\protos\flexible_grid_anchor_generator.proto

# If the above command fails, run this instead (in a .bat file, write %%i instead of %i).

for /f %i in ('dir /b object_detection\protos\*.proto') do protoc object_detection\protos\%i --python_out=.

# Now we are ready to build and install the API

python setup.py build

python setup.py install
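The `for /f` loop above only works in the Windows command prompt. As a cross-platform alternative, the sketch below drives the same per-file protoc compilation from Python, and is meant to run before `python setup.py build`. It assumes `protoc` is on your PATH. `protoc_commands` and `compile_protos` are hypothetical helper names, not part of the Object Detection API.

```python
import subprocess
from pathlib import Path


def protoc_commands(research_dir: str) -> list:
    """Build one protoc command per .proto file in object_detection/protos.

    Paths are relative to research_dir, like the loop in the command listing above.
    """
    protos_dir = Path(research_dir) / "object_detection" / "protos"
    commands = []
    for proto in sorted(protos_dir.glob("*.proto")):
        rel = proto.relative_to(research_dir).as_posix()
        commands.append(["protoc", rel, "--python_out=."])
    return commands


def compile_protos(research_dir: str) -> None:
    """Run each protoc command from research_dir; stops at the first failure."""
    for cmd in protoc_commands(research_dir):
        subprocess.run(cmd, cwd=research_dir, check=True)
```

Usage: `compile_protos(r"C:\my_projects\exp\tensorflow1_cards\models\research")`. Compiling one file per call mirrors the loop and avoids relying on shell wildcard expansion.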

B. Installing TensorFlow from Source with CPU Optimizations (to speed up execution by about 3x)

To build TensorFlow from source, read Q.5 of my article (for Linux) at the link below; the other steps remain the same. https://www.dattatrayshinde.com/single-post/how-to-setup-tensorflow-environment-for-deep-learning You can also refer to the following links if you want to build TensorFlow from source.

https://www.tensorflow.org/install/source#tested_build_configurations

https://dev.to/mayurdeshmukh10/installing-tensorflow-from-source-with-cpu-optimizations-44k8

We don’t need this right now to complete this tutorial.

Data preparation (Local)

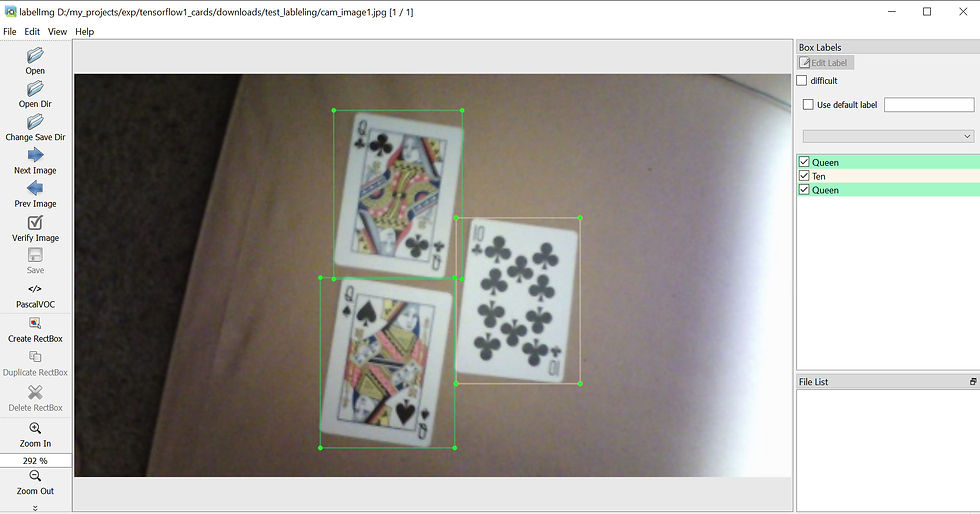

A. Image labeling with the tool

To annotate images, we will use the labelImg package. If you haven’t installed it yet, have a look at the LabelImg installation instructions.

I have used this tool locally and found it very easy to use and really helpful.

labelImg tool UI
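labelImg saves each image's boxes as a Pascal VOC XML file. The `xml_to_csv.py` step below flattens these files into one CSV row per bounding box. The sketch below shows that conversion for a single file. The column layout is assumed to match the CSV produced by `xml_to_csv.py`, and `SAMPLE_XML`, `voc_xml_to_rows` and `rows_to_csv` are hypothetical names used only for illustration.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Column layout assumed to match the CSV written by xml_to_csv.py.
COLUMNS = ["filename", "width", "height", "class", "xmin", "ymin", "xmax", "ymax"]

# Hypothetical labelImg annotation for one image with one box.
SAMPLE_XML = """<annotation>
  <filename>cam_image1.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>queen</name>
    <bndbox><xmin>10</xmin><ymin>20</ymin><xmax>110</xmax><ymax>220</ymax></bndbox>
  </object>
</annotation>"""


def voc_xml_to_rows(xml_text: str) -> list:
    """Return one row per bounding box in a Pascal VOC annotation."""
    root = ET.fromstring(xml_text)
    filename = root.findtext("filename")
    size = root.find("size")
    width = int(size.findtext("width"))
    height = int(size.findtext("height"))
    rows = []
    for obj in root.findall("object"):
        box = obj.find("bndbox")
        # Some tools write float coordinates; convert via float to be safe.
        coords = [int(float(box.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax")]
        rows.append([filename, width, height, obj.findtext("name")] + coords)
    return rows


def rows_to_csv(rows: list) -> str:
    """Serialize rows, preceded by a header line, to CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(COLUMNS)
    writer.writerows(rows)
    return buffer.getvalue()


print(rows_to_csv(voc_xml_to_rows(SAMPLE_XML)))
```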

B. Tfrecord generation

The images folder inside C:\my_projects\exp\tensorflow1_cards\models\research\object_detection already contains labeled files, which we copied from the EdjeElectronics GitHub repository (they in turn took the labels from another project). There are around 269 images in the train folder and 67 in the test folder.
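A TFRecord file is a plain sequence of framed records. Each record consists of an 8-byte little-endian length, a masked CRC-32C of those 8 bytes, the payload, and a masked CRC-32C of the payload. Once the commands below have produced train.record and test.record, a dependency-free reader like the sketch below can count their records without importing TensorFlow. If, as assumed here, generate_tfrecord.py writes one `tf.train.Example` per image, the counts should roughly match the number of train and test images. The sketch only counts payloads and does not decode them. `count_records` and the other helpers are hypothetical names, and the writer exists only to self-test the reader.

```python
import struct


def _crc32c_table() -> list:
    """Lookup table for CRC-32C (Castagnoli polynomial, reflected form 0x82F63B78)."""
    table = []
    for i in range(256):
        crc = i
        for _ in range(8):
            crc = (crc >> 1) ^ 0x82F63B78 if crc & 1 else crc >> 1
        table.append(crc)
    return table


_TABLE = _crc32c_table()


def crc32c(data: bytes) -> int:
    crc = 0xFFFFFFFF
    for byte in data:
        crc = _TABLE[(crc ^ byte) & 0xFF] ^ (crc >> 8)
    return crc ^ 0xFFFFFFFF


def masked_crc(data: bytes) -> int:
    """TFRecord's masked CRC: rotate the CRC-32C right by 15 bits, then add a constant."""
    crc = crc32c(data)
    rotated = ((crc >> 15) | (crc << 17)) & 0xFFFFFFFF
    return (rotated + 0xA282EAD8) & 0xFFFFFFFF


def write_records(path: str, payloads) -> None:
    """Write payloads with TFRecord framing (used here to self-test the reader)."""
    with open(path, "wb") as f:
        for data in payloads:
            length = struct.pack("<Q", len(data))
            f.write(length + struct.pack("<I", masked_crc(length)))
            f.write(data + struct.pack("<I", masked_crc(data)))


def read_records(path: str, verify: bool = True):
    """Yield each payload; raise ValueError on truncation or CRC mismatch."""
    with open(path, "rb") as f:
        while True:
            length = f.read(8)
            if not length:
                return
            length_crc = f.read(4)
            if len(length) != 8 or len(length_crc) != 4:
                raise ValueError("truncated record header")
            (size,) = struct.unpack("<Q", length)
            data = f.read(size)
            data_crc = f.read(4)
            if len(data) != size or len(data_crc) != 4:
                raise ValueError("truncated record payload")
            if verify:
                if struct.unpack("<I", length_crc)[0] != masked_crc(length):
                    raise ValueError("length CRC mismatch")
                if struct.unpack("<I", data_crc)[0] != masked_crc(data):
                    raise ValueError("data CRC mismatch")
            yield data


def count_records(path: str, verify: bool = True) -> int:
    return sum(1 for _ in read_records(path, verify))
```

Usage: `print(count_records("train.record"))`. The pure-Python CRC is slow on large files, so pass `verify=False` if you only need the count.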

# Run the command below to convert the XML files of all training and test images into CSV format

cd object_detection

python xml_to_csv.py

# now we need to generate tf_records

# TFRecord format is a simple format for storing a sequence of binary records.

# Create train data:

python generate_tfrecord.py --csv_input=images/train_labels.csv --image_dir=images/train --output_path=train.record

# Create test data:

python generate_tfrecord.py --csv_input=images/test_labels.csv --image_dir=images/test --output_path=test.record

Env Setup (Online, on Colab)

You can also train locally on a Windows machine if it has a GPU. But since Google provides free GPUs and TPUs in the Colab environment, we will use Colab.

# Select TensorFlow 1.x

%tensorflow_version 1.x

# In Colab, change the runtime type to GPU from the Runtime menu, then run the code below

import tensorflow as tf

device_name = tf.test.gpu_device_name()

if device_name != '/device:GPU:0':
    raise SystemError('GPU device not found')

print('Found GPU at: {}'.format(device_name))

print(tf.__version__)

# mount the gdrive

from google.colab import drive

drive.mount('/content/gdrive')

# Change the directory

%cd '/content/gdrive/My Drive/Desktop/'

# install required libraries

!apt-get install protobuf-compiler python-pil python-lxml python-tk

!pip install Cython

# Compile the proto files

%cd /content/gdrive/My Drive/my_projects/tensorflow1_cards/models/research/

!protoc object_detection/protos/*.proto --python_out=.

# Set the environment variables

import os

os.environ['PYTHONPATH'] += ':/content/gdrive/My Drive/my_projects/tensorflow1_cards/models/research/:/content/gdrive/My Drive/my_projects/tensorflow1_cards/models/research/slim'

# In every session, build and install the Object Detection API with the following commands

!python setup.py build

!python setup.py install

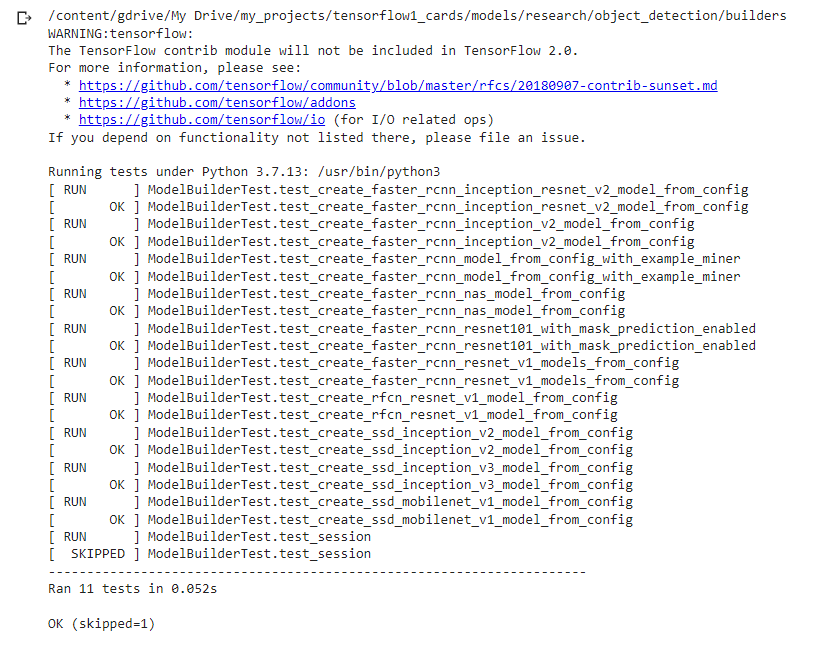
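There are two details to note about the `os.environ['PYTHONPATH'] += ...` line. First, it raises `KeyError` if PYTHONPATH is not set yet. Second, it only affects processes started afterwards, such as the `!python ...` commands. Imports inside the running notebook kernel use `sys.path` instead. The sketch below updates both and skips duplicates, so re-running the cell is harmless. `add_to_pythonpath` is a hypothetical helper, and `RESEARCH` assumes the Drive layout used above.

```python
import os
import sys

# Assumed project location on Google Drive, as used in the commands above.
RESEARCH = "/content/gdrive/My Drive/my_projects/tensorflow1_cards/models/research"


def add_to_pythonpath(paths, environ=None, sys_path=None):
    """Append paths to PYTHONPATH (for !python subprocesses) and sys.path (for this kernel)."""
    environ = os.environ if environ is None else environ
    sys_path = sys.path if sys_path is None else sys_path
    # Works whether or not PYTHONPATH is already set.
    current = [p for p in environ.get("PYTHONPATH", "").split(os.pathsep) if p]
    for path in paths:
        if path not in current:
            current.append(path)
        if path not in sys_path:
            sys_path.append(path)
    environ["PYTHONPATH"] = os.pathsep.join(current)
    return environ["PYTHONPATH"]


if __name__ == "__main__":
    print(add_to_pythonpath([RESEARCH, RESEARCH + "/slim"]))
```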

# Check whether the installation works

%cd /content/gdrive/My Drive/my_projects/tensorflow1_cards/models/research/object_detection/builders/

!python model_builder_test.py

# Run the code below at any time to check how much time is left in the Colab session

import time, psutil

# Seconds elapsed since the Colab VM booted
elapsed = time.time() - psutil.boot_time()

# Assumes a 12-hour session limit
left = 12 * 3600 - elapsed

print('Time remaining for this session (hours):', left / 3600)

Before training the model, go through the following checklist:

1. The environment should be ready

The output of model_builder_test.py above shows whether the environment is ready.

2. The TFRecords should be generated

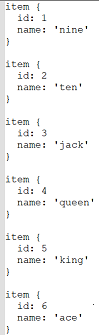

3. The label map should be configured properly for the classes of objects to detect

Label Map

4. In the model's config file, update the paths to the checkpoint and to the train and test TFRecords. Change the highlighted paths below, and set the num_classes variable (it should be 6, since we have 6 classes).

config
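Checklist items 3 and 4 must agree. The label map lists each class with an ID starting at 1 (ID 0 is reserved for the background), and `num_classes` in the config must equal the number of entries. The sketch below generates the label map text and patches a pipeline config accordingly. The class list is an assumption based on the EdjeElectronics card dataset, and its order must match the class-to-ID mapping used when generating the TFRecords. `make_label_map` and `patch_pipeline_config` are hypothetical helpers. The sketch also assumes `train_input_reader` appears before `eval_input_reader`, as in the sample configs.

```python
import re

# Assumed class order for the EdjeElectronics card dataset; it must match the
# class-to-ID mapping used by generate_tfrecord.py.
CLASSES = ["nine", "ten", "jack", "queen", "king", "ace"]


def make_label_map(class_names) -> str:
    """Render a label map; IDs start at 1 because 0 is reserved for the background."""
    items = []
    for idx, name in enumerate(class_names, start=1):
        items.append("item {{\n  id: {}\n  name: '{}'\n}}\n".format(idx, name))
    return "\n".join(items)


def patch_pipeline_config(text, class_names, checkpoint, train_record, test_record, label_map):
    """Set num_classes and the checkpoint, TFRecord, and label map paths in a config.

    Replacements are lambdas so that backslashes in paths are not treated as regex
    escapes. Prefer forward slashes anyway: the config is protobuf text format,
    where a backslash starts an escape sequence inside strings.
    """
    text = re.sub(r"num_classes:\s*\d+",
                  lambda m: "num_classes: {}".format(len(class_names)), text)
    text = re.sub(r'fine_tune_checkpoint:\s*"[^"]*"',
                  lambda m: 'fine_tune_checkpoint: "{}"'.format(checkpoint), text)
    text = re.sub(r'label_map_path:\s*"[^"]*"',
                  lambda m: 'label_map_path: "{}"'.format(label_map), text)
    # First input_path belongs to train_input_reader, second to eval_input_reader.
    records = iter([train_record, test_record])
    text = re.sub(r'input_path:\s*"[^"]*"',
                  lambda m: 'input_path: "{}"'.format(next(records)), text, count=2)
    return text


print(make_label_map(CLASSES))
```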

Model Training

Now, let's start training the model.

%cd /content/gdrive/My Drive/my_projects/tensorflow1_cards/models/research/object_detection/

!python train.py --logtostderr --train_dir=training/ --pipeline_config_path=training/faster_rcnn_inception_v2_pets.config

Train the model for at least 60K steps, until the loss drops to about 0.25.

Training Log

Note: train.py has counterparts for other TensorFlow versions and setups:

model_main.py

model_main_tf2.py

model_tpu_main.py (for TPU)

Make sure you use the training script that matches your setup.

Model Monitoring

Let's monitor the model training with TensorBoard.

# Run tensorboard

%load_ext tensorboard

%tensorboard --logdir training/

Use it regularly to check the loss and other evaluation metrics such as mAP.

Tensorboard

Model Inference

1. First export the inference graph to generate frozen_inference_graph.pb

!python export_inference_graph.py --input_type image_tensor --pipeline_config_path training/faster_rcnn_inception_v2_pets.config --trained_checkpoint_prefix training/model.ckpt-XXXX --output_directory inference_graph

2. Replace XXXX in the command above with the number of the latest checkpoint in the training folder, e.g. 24856 if you stopped training after 24856 steps.

3. Use the Python script Object_detection_image.py, already provided in the object_detection folder, to run inference. You might need to adjust the OpenCV (cv2) import.
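TF1 training writes each checkpoint as a group of files such as `model.ckpt-24856.index`, `.meta` and `.data-*`. The XXXX in the export command is therefore the largest step number among the `.index` files. TensorFlow itself provides `tf.train.latest_checkpoint(train_dir)` for this. The sketch below avoids importing TensorFlow by scanning file names, and then builds the export command as an argument list, which also copes with spaces such as "My Drive". `latest_checkpoint_step` and `export_command` are hypothetical helpers.

```python
import re
from pathlib import Path

# TF1 checkpoints are saved as model.ckpt-<step>.index / .meta / .data-* files.
CKPT_INDEX = re.compile(r"^model\.ckpt-(\d+)\.index$")


def latest_checkpoint_step(train_dir: str):
    """Return the highest step among model.ckpt-<step>.index files, or None."""
    steps = []
    for path in Path(train_dir).iterdir():
        match = CKPT_INDEX.match(path.name)
        if match:
            steps.append(int(match.group(1)))
    return max(steps) if steps else None


def export_command(train_dir: str, config_path: str, output_dir: str = "inference_graph") -> list:
    """Build the export_inference_graph.py command for the latest checkpoint."""
    step = latest_checkpoint_step(train_dir)
    if step is None:
        raise FileNotFoundError("no model.ckpt-<step>.index files in {}".format(train_dir))
    prefix = "{}/model.ckpt-{}".format(train_dir.rstrip("/"), step)
    return ["python", "export_inference_graph.py",
            "--input_type", "image_tensor",
            "--pipeline_config_path", config_path,
            "--trained_checkpoint_prefix", prefix,
            "--output_directory", output_dir]
```

From the object_detection folder, you could pass `export_command("training", "training/faster_rcnn_inception_v2_pets.config")` to `subprocess.run(..., check=True)`, or print it with `" ".join(...)`.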

Result

That’s it! We have successfully trained and run a custom object detection model using the Google Object Detection API in Colab. Please contact me if you have any questions or would like the Colab notebook with the code.

References

1. TensorFlow 2 Object Detection API tutorial (tf1.x versions are also available) https://tensorflow-object-detection-api-tutorial.readthedocs.io/en/latest/training.html

2. TensorFlow 2 Object Detection — To detect cards

🎬 Video 1

🎬 Video 2

3. 👉 Article I — steps to update parameters of Faster R-CNN/SSD models in TensorFlow Object Detection API

4. 👉 Neptune blog — How to Train Your Own Object Detector Using TensorFlow Object Detection API

6. 🎯 Git code Repo — Edje Electronics Github Page